The "RAID" acronym first appeared in 1988 in the earliest of the Berkeley Papers, written by Patterson, Gibson, and Katz of the University of California at Berkeley, where it stood for "Redundant Array of Inexpensive Disks". The RAID Advisory Board has since substituted "Independent" for "Inexpensive". A series of papers written by the original three authors and others defined and categorized several data protection and mapping models for disk arrays. Some of the models described in these papers, such as mirroring, were known at the time; others were new. The word "levels" used by the authors to differentiate the models from each other may suggest that a higher-numbered RAID model is uniformly superior to a lower-numbered one. This is not the case.

RAID 0 (Striping)

RAID 0: Striped Disk Array without Fault Tolerance

RAID Level 0 requires a minimum of 2 drives to implement.

RAID Level 0 is a performance-oriented striped data mapping technique. Uniformly sized blocks of storage are assigned in regular sequence to all of an array's disks. RAID Level 0 provides high I/O performance at low inherent cost, since no additional disks are required. The reliability of RAID Level 0, however, is less than that of any of its member disks because it lacks redundancy. Despite the name, RAID Level 0 is not actually RAID unless it is combined with other technologies that provide data and functional redundancy, regeneration, and rebuilding.
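As a minimal sketch of the round-robin mapping described above, the Python below converts a logical block number into a (disk, offset) pair. The strip size in blocks (`blocks_per_strip`) and the function names are illustrative assumptions, not taken from any particular controller. The second function shows why reliability drops: assuming disks fail independently and each survives a given period with probability p, an n-disk RAID 0 array survives that period only with probability p^n.

```python
def raid0_locate(logical_block: int, num_disks: int, blocks_per_strip: int = 1) -> tuple[int, int]:
    """Map a logical block to (disk index, block offset on that disk) for RAID 0 striping."""
    if num_disks < 2:
        raise ValueError("RAID 0 needs at least 2 disks")
    strip = logical_block // blocks_per_strip   # which strip the block falls in
    within = logical_block % blocks_per_strip   # position inside that strip
    disk = strip % num_disks                    # strips are dealt out round-robin
    stripe = strip // num_disks                 # row of strips across all disks
    return disk, stripe * blocks_per_strip + within


def raid0_survival(p_disk: float, num_disks: int) -> float:
    """Probability that the whole array survives, assuming independent disk failures."""
    return p_disk ** num_disks


print([raid0_locate(b, 3, 2) for b in range(6)])  # blocks 0-5 land on disks 0, 0, 1, 1, 2, 2
print(raid0_survival(0.95, 4))
```

Losing any one disk loses every stripe, which is why the survival probability is a product over all member disks rather than anything better.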

Advantages: RAID 0 implements a striped disk array: the data is broken down into blocks, and each block is written to a separate disk drive. I/O performance is greatly improved by spreading the I/O load across many channels and drives. Best performance is achieved when data is striped across multiple controllers with only one drive per controller. No parity calculation overhead is involved. Very simple design. Easy to implement.

Disadvantages: Not a "true" RAID because it is NOT fault-tolerant. The failure of just one drive will result in all data in the array being lost. Should never be used in mission-critical environments.

Recommended Applications: Video Production and Editing; Image Editing; Pre-Press Applications; Any application requiring high bandwidth.

RAID 1 (Mirroring)

RAID 1: Mirroring and Duplexing

For highest performance, the controller must be able to perform two concurrent separate Reads per mirrored pair or two duplicate Writes per mirrored pair.

RAID Level 1 requires a minimum of 2 drives to implement.

RAID Level 1, also called mirroring, has been used longer than any other form of RAID. It remains popular because of its simplicity and high level of reliability and availability. Mirrored arrays consist of two or more disks. Each disk in a mirrored array holds an identical image of user data. A RAID Level 1 array may use parallel access for a high transfer rate when reading. More commonly, RAID Level 1 array members operate independently and improve performance for read-intensive applications, but at relatively high inherent cost. This is a good entry-level redundant system, since only two drives are required.

Advantages: One Write or two Reads possible per mirrored pair. Twice the Read transaction rate of single disks. Same Write transaction rate as single disks. 100% redundancy of data means no rebuild is necessary in case of a disk failure, just a copy to the replacement disk. Transfer rate per block is equal to that of a single disk. Under certain circumstances, RAID 1 can sustain multiple simultaneous drive failures. Simplest RAID storage subsystem design.

Disadvantages: Highest disk overhead of all RAID types (100%), which is inefficient. Typically the RAID function is done by system software, loading the CPU/server and possibly degrading throughput at high activity levels. Hardware implementation is strongly recommended. May not support hot swap of a failed disk when implemented in "software".

Recommended Applications: Accounting; Payroll; Financial; Any application requiring very high availability.
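The toy model below is a sketch, with a hypothetical class and method names, of the mirroring behavior described in this section: every write goes to all working members, reads can be spread across members (the source of the doubled Read transaction rate), and replacing a failed disk is a plain copy from a survivor rather than a parity rebuild. Disks are modeled as in-memory dictionaries, which is an assumption made purely for illustration.

```python
class MirrorSet:
    """Toy RAID 1 set: each disk is a dict mapping block number -> bytes."""

    def __init__(self, num_disks: int = 2):
        if num_disks < 2:
            raise ValueError("RAID 1 needs at least 2 disks")
        self.disks = [{} for _ in range(num_disks)]
        self.failed = set()
        self._next_reader = 0  # round-robin cursor for spreading reads

    def healthy(self) -> list:
        return [i for i in range(len(self.disks)) if i not in self.failed]

    def write(self, block: int, data: bytes) -> None:
        # Every write is duplicated onto each working member.
        for i in self.healthy():
            self.disks[i][block] = data

    def read(self, block: int) -> tuple:
        # Alternate reads between working members so two reads can proceed in parallel.
        members = self.healthy()
        if not members:
            raise IOError("all mirror members have failed")
        i = members[self._next_reader % len(members)]
        self._next_reader += 1
        return i, self.disks[i][block]

    def fail(self, i: int) -> None:
        self.failed.add(i)

    def replace(self, i: int) -> None:
        # No parity rebuild: the replacement simply receives a copy of a surviving member.
        source = next(j for j in self.healthy() if j != i)
        self.disks[i] = dict(self.disks[source])
        self.failed.discard(i)


m = MirrorSet()
m.write(7, b"payroll")
print([m.read(7)[0] for _ in range(4)])  # reads alternate between disks 0 and 1
```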

RAID 0+1

RAID 0+1: High Data Transfer Performance

RAID Level 0+1 requires a minimum of 4 drives to implement.

RAID Level 0+1 is a striping and mirroring combination without parity. RAID 0+1 has fast data access (like RAID 0) and single-drive fault tolerance (like RAID 1). RAID 0+1 still requires twice the number of disks (like RAID 1).

Advantages: RAID 0+1 is implemented as a mirrored array whose segments are RAID 0 arrays. RAID 0+1 has the same fault tolerance as RAID level 5. RAID 0+1 has the same overhead for fault tolerance as mirroring alone. High I/O rates are achieved thanks to multiple stripe segments. Excellent solution for sites that need high performance but are not concerned with achieving maximum reliability.

Disadvantages: RAID 0+1 is NOT to be confused with RAID 10. A single drive failure will cause the whole array to become, in essence, a RAID Level 0 array. Very expensive / high overhead. All drives must move in parallel to the proper track, lowering sustained performance. Very limited scalability at a very high inherent cost.

Recommended Applications: Imaging applications; General fileserver.
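To make the difference between RAID 0+1 and RAID 10 concrete, the sketch below counts which failures each layout survives when the same four disks are arranged either as two mirrored RAID 0 segments (0+1) or as two striped RAID 1 pairs (10). The disk numbering and grouping are illustrative assumptions, and failures are treated as simultaneous with no rebuild in between.

```python
from itertools import combinations


def raid01_survives(failed: set, segments) -> bool:
    """RAID 0+1: a mirror of RAID 0 segments; data survives while some segment is untouched."""
    return any(not (set(seg) & failed) for seg in segments)


def raid10_survives(failed: set, mirrors) -> bool:
    """RAID 10: a stripe of RAID 1 pairs; data survives while every pair keeps a working disk."""
    return all(set(pair) - failed for pair in mirrors)


def count_survivable(num_failures: int, groups, survives) -> int:
    """Count the failure sets of a given size that the layout survives."""
    disks = sorted(d for group in groups for d in group)
    return sum(survives(set(c), groups) for c in combinations(disks, num_failures))


groups = [(0, 1), (2, 3)]  # same 4 disks: stripe segments for 0+1, mirror pairs for 10
for k in (1, 2):
    print(k, count_survivable(k, groups, raid01_survives),
          count_survivable(k, groups, raid10_survives))
```

With these groupings both layouts survive every single-disk failure, but RAID 0+1 survives only 2 of the 6 possible two-disk failures while RAID 10 survives 4, which reflects the point that one failure reduces RAID 0+1 to, in essence, a RAID 0 array.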

RAID 2 (ECC)

RAID 2: Hamming Code ECC.

Each bit of a data word is written to a data disk drive (4 in this example: 0 to 3). Each data word has its Hamming Code ECC word recorded on the ECC disks. On Read, the ECC code verifies correct data or corrects single disk errors.
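A minimal Python sketch of the Hamming Code idea, assuming the classic Hamming(7,4) arrangement: one bit from each of the four data disks plus three ECC bits, one per ECC disk. The number of ECC disks is an assumption; the text above only fixes the four data disks. Positions 1, 2 and 4 of the 7-bit codeword hold check bits, and on read the syndrome names the position of a single bad bit, which is then flipped back.

```python
def hamming74_encode(d: list) -> list:
    """Encode 4 data bits (one per data disk) into a 7-bit codeword, positions 1..7."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # checks positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # checks positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # checks positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword: list) -> tuple:
    """Return (corrected data bits, syndrome); a non-zero syndrome is the bad bit position."""
    c = list(codeword)
    syndrome = 0
    for check in (1, 2, 4):
        parity = 0
        for pos in range(1, 8):
            if pos & check:
                parity ^= c[pos - 1]
        if parity:
            syndrome |= check
    if syndrome:
        c[syndrome - 1] ^= 1  # correct the single failing disk's bit "on the fly"
    return [c[2], c[4], c[5], c[6]], syndrome


word = hamming74_encode([1, 0, 1, 1])
word[5] ^= 1                      # simulate a bad bit from the disk holding position 6
print(hamming74_decode(word))     # data restored, syndrome points at position 6
```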

RAID Level 2 is one of two inherently parallel mapping and protection techniques defined in the Berkeley paper. It has not been widely deployed in industry, largely because it requires special disk features. Since disk production volumes determine cost, it is more economical to use standard disks for RAID systems.

Advantages: "On the fly" data error correction. Extremely high data transfer rates possible. The higher the data transfer rate required, the better the ratio of data disks to ECC disks. Relatively simple controller design compared to RAID levels 3, 4, and 5.

Disadvantages: Very high ratio of ECC disks to data disks with smaller word sizes, which is inefficient. Entry-level cost is very high; only a very high transfer rate requirement justifies it. Transaction rate is equal to that of a single disk at best (with spindle synchronization). No commercial implementations exist / not commercially viable.
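The ratio claims above can be checked with the standard bound for a single-error-correcting Hamming code: m data bits need the smallest number r of check bits with 2^r >= m + r + 1. The sketch assumes one bit per disk and ignores the extra parity bit that double-error-detecting variants add.

```python
def hamming_check_disks(data_disks: int) -> int:
    """Smallest number r of ECC disks with 2**r >= data_disks + r + 1 (single-error correction)."""
    r = 1
    while 2 ** r < data_disks + r + 1:
        r += 1
    return r


for m in (4, 10, 32):
    r = hamming_check_disks(m)
    print(f"{m} data disks -> {r} ECC disks ({r / m:.0%} overhead)")
```

For 4, 10 and 32 data disks it gives 3, 4 and 6 ECC disks respectively, so the ECC overhead falls from 75% to under 20% as the word (and the array) gets wider.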

RAID 3

RAID 3: Parallel transfer with Parity

The data block is subdivided ("striped") and written on the data disks. Stripe parity is generated on Writes, recorded on the parity disk and checked on Reads.

RAID Level 3 requires a minimum of 3 drives to implement.

RAID Level 3 adds redundant information in the form of parity to a parallel access striped array, permitting regeneration and rebuilding in the event of a disk failure. One strip of parity protects the corresponding strips of data on the remaining disks. RAID Level 3 provides a high transfer rate and high availability at an inherently lower cost than mirroring. Its transaction performance is poor, however, because all RAID Level 3 array member disks operate in lockstep.

RAID 3 utilizes a striped set of three or more disks, with the parity of the strips (or chunks) comprising each stripe written to a disk. Note that parity is not required to be written to the same disk. Furthermore, RAID 3 requires data to be distributed across all disks in the array in bit- or byte-sized chunks. Assuming that a RAID 3 array has N drives, this ensures that when data is read, the sum of the data bandwidth of N - 1 drives is realized. The figure below illustrates an example of a RAID 3 array consisting of three disks. Disks A, B and C comprise the striped set, with the strips on disk C dedicated to storing the parity for the strips of the corresponding stripe. For instance, the strip on disk C marked as P(1A,1B) contains the parity for the strips 1A and 1B. Similarly, the strip on disk C marked as P(2A,2B) contains the parity for the strips 2A and 2B.
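A minimal sketch of RAID 3 parity, assuming the three-disk layout just described: bytes are dealt round-robin to data disks A and B, disk C stores their XOR, and a lost data disk is recomputed by XOR-ing the parity with the surviving strips. The function names and the zero-padding of the final stripe are illustrative assumptions.

```python
from functools import reduce


def xor_strips(*strips: bytes) -> bytes:
    """Bytewise XOR of equal-length strips."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*strips))


def raid3_write(data: bytes, data_disks: int):
    """Byte-stripe data across the data disks and compute the parity strip."""
    padded = data + bytes(-len(data) % data_disks)  # zero-fill the final stripe
    strips = [padded[d::data_disks] for d in range(data_disks)]
    return strips, xor_strips(*strips)


def raid3_rebuild(strips, parity: bytes, lost: int) -> bytes:
    """Regenerate the lost data strip from the parity strip and the surviving strips."""
    survivors = [s for i, s in enumerate(strips) if i != lost]
    return xor_strips(parity, *survivors)


def raid3_read(strips, length: int) -> bytes:
    """Re-interleave bytes from all data disks; every disk takes part in every read."""
    return bytes(b for row in zip(*strips) for b in row)[:length]


message = b"HELLO, RAID 3"
strips, parity = raid3_write(message, 2)      # disks A and B hold data, disk C holds parity
rebuilt_a = raid3_rebuild(strips, parity, 0)  # pretend disk A failed
assert raid3_read([rebuilt_a, strips[1]], len(message)) == message
```

Because every byte of every request touches all data disks, the sketch also shows why RAID 3 favors large transfers over many small independent transactions.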

Advantages: Very high Read data transfer rate. Very high Write data transfer rate. Disk failure has an insignificant impact on throughput. Low ratio of ECC (Parity) disks to data disks means high efficiency. RAID 3 ensures that if one of the disks in the striped set (other than the parity disk) fails, its contents can be recalculated using the information on the parity disk and the remaining functioning disks. If the parity disk itself fails, the RAID array is not affected in terms of I/O throughput, but it no longer has protection from additional disk failures. Also, a RAID 3 array can improve the throughput of read operations by allowing reads to be performed concurrently on multiple disks in the set.

Disadvantages: Transaction rate equal to that of a single disk drive at best (if spindles are synchronized). Read operations can be time-consuming when the array is operating in degraded mode. Due to the restriction of having to write to all disks, the amount of actual disk space consumed is always a multiple of the disks' block size times the number of disks in the array. This can lead to wasted space. Controller design is fairly complex. Very difficult and resource-intensive to do as a "software" RAID.

Recommended Applications: Video Production and live streaming; Image Editing; Video Editing; Prepress Applications; Any application requiring high throughput.

RAID 4

RAID 4: Independent Data disks with Shared Parity disk

Each entire block is written onto a data disk. Parity for same-rank blocks is generated on Writes, recorded on the parity disk and checked on Reads.

RAID Level 4 requires a minimum of 3 drives to implement.

Like RAID Level 3, RAID Level 4 uses parity concentrated on a single disk to protect data. Unlike RAID Level 3, however, a RAID Level 4 array's member disks are independently accessible. Its performance is therefore more suited to transaction I/O than large file transfers. RAID Level 4 is seldom implemented without accompanying technology, such as write-back cache, because the dedicated parity disk represents an inherent write bottleneck.
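The write bottleneck can be seen in a small-write sketch. Assuming the usual read-modify-write update (new parity = old parity XOR old data XOR new data), every small write to any data disk also costs one read and one write on the single parity disk. The class and its I/O counter are hypothetical illustrations, not part of any controller API.

```python
from functools import reduce


def xor(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


class Raid4Stripe:
    """One stripe: several data blocks plus one block on the dedicated parity disk."""

    def __init__(self, blocks):
        self.data = list(blocks)
        self.parity = reduce(xor, self.data)
        self.parity_disk_ios = 0  # I/Os that land on the single parity disk

    def small_write(self, disk: int, new_block: bytes) -> None:
        """Read-modify-write: new parity = old parity XOR old data XOR new data."""
        old_block = self.data[disk]                                # read on the data disk
        old_parity = self.parity                                   # read on the parity disk
        self.parity = xor(xor(old_parity, old_block), new_block)   # write on the parity disk
        self.data[disk] = new_block                                # write on the data disk
        self.parity_disk_ios += 2


stripe = Raid4Stripe([b"\x01\x02", b"\x10\x20", b"\xff\x00"])
stripe.small_write(0, b"\xaa\xbb")
stripe.small_write(2, b"\x00\x01")
print(stripe.parity_disk_ios)  # writes to two different data disks both queued on the parity disk
```

Writes aimed at different data disks could in principle run in parallel, but they all serialize on the parity disk, which is the bottleneck RAID 5 spreads out.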

Advantages: Very high Read data transaction rate. Low ratio of ECC (Parity) disks to data disks means high efficiency. High aggregate Read transfer rate.

Disadvantages: Quite complex controller design. Worst Write transaction rate and Write aggregate transfer rate. Difficult and inefficient data rebuild in the event of disk failure. Block Read transfer rate equal to that of a single disk.

RAID 5

RAID 5: Independent Data disks with Distributed Parity blocks

Each entire data block is written on a data disk; parity for blocks in the same rank is generated on Writes, recorded in a distributed location and checked on Reads. The usable capacity of an array of N disks is that of N-1 disks.

RAID Level 5 requires a minimum of 3 drives to implement.

By distributing parity across some or all of an array's member disks, RAID Level 5 reduces (but does not eliminate) the write bottleneck inherent in RAID Level 4. As with RAID Level 4, the result is asymmetrical performance, with reads substantially outperforming writes. To reduce or eliminate this intrinsic asymmetry, RAID Level 5 is often augmented with techniques such as caching and parallel multiprocessors.

The figure below illustrates an example of a RAID 5 array consisting of three disks: A, B and C. For instance, the strip on disk C marked as P(1A,1B) contains the parity for the strips 1A and 1B. Similarly, the strip on disk A marked as P(2B,2C) contains the parity for the strips 2B and 2C. RAID 5 ensures that if one of the disks in the striped set fails, its contents can be reconstructed using the information on the remaining functioning disks. It has a distinct advantage over RAID 4 when writing, since the parity data is distributed across all drives rather than written to a single drive as in RAID 4. Also, a RAID 5 array can improve the throughput of read operations by allowing reads to be performed concurrently on multiple disks in the set.
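A sketch of a rotating-parity layout for the three-disk example. The rotation order (parity on the last disk for stripe 1, then the first disk, then the next) is an assumption chosen to match the two stripes described above; real implementations use several different layouts (Linux md, for example, offers left-symmetric, left-asymmetric, right-symmetric and right-asymmetric variants).

```python
def raid5_layout(num_stripes: int, disks: str = "ABC") -> list:
    """Return one dict per stripe mapping disk name -> strip label, with rotating parity."""
    n = len(disks)
    if n < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    layout = []
    for s in range(num_stripes):
        row = s + 1                       # stripes are numbered from 1 as in the figure
        parity_index = (n - 1 + s) % n    # assumed rotation: last disk, then first, then next
        data_disks = [d for i, d in enumerate(disks) if i != parity_index]
        parity_label = "P(" + ",".join(f"{row}{d}" for d in data_disks) + ")"
        layout.append({d: (parity_label if i == parity_index else f"{row}{d}")
                       for i, d in enumerate(disks)})
    return layout


for stripe in raid5_layout(3):
    print(stripe)
```

Over any n consecutive stripes each disk holds parity exactly once, so parity updates are spread evenly instead of all landing on one disk as in RAID 4.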

Advantages: Highest Read data transaction rate. Medium Write data transaction rate. Low ratio of ECC (Parity) disks to data disks means high efficiency. Good aggregate transfer rate.

Disadvantages: Disk failure has a medium impact on throughput. Most complex controller design. Difficult to rebuild in the event of a disk failure (as compared to RAID level 1). Individual block data transfer rate same as single disk.

Recommended Applications: File and Application servers; Database servers; WWW, E-mail, and News servers; Intranet servers; Most versatile RAID level.

RAID 6

RAID 6: Independent Data disks with two Independent Distributed Parity schemes.

Advantages: RAID 6 is essentially an extension of RAID level 5 that allows for additional fault tolerance by using a second independent distributed parity scheme (two-dimensional parity). Data is striped on a block level across a set of drives, just like in RAID 5, and a second set of parity is calculated and written across all the drives. RAID 6 provides extremely high data fault tolerance and can sustain the simultaneous failure of any two drives. Perfect solution for mission-critical applications.

Disadvantages: Very complex controller design. Controller overhead to compute parity addresses is extremely high. Very poor write performance. Requires N+2 drives to implement (N drives' worth of data plus two drives' worth of parity) because of the two-dimensional parity scheme.
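The text describes RAID 6's second parity only as an independent "two-dimensional" scheme. One widely used concrete realization, used for example by the Linux md driver, is P+Q parity: P is the ordinary XOR parity of RAID 5 and Q is a Reed-Solomon syndrome computed in the finite field GF(2^8). The sketch below assumes that scheme (field polynomial 0x11D, generator 2) and shows how any two lost data disks can be rebuilt; it is an illustration, not the algorithm of any specific controller.

```python
# GF(2^8) tables for the field polynomial x^8 + x^4 + x^3 + x^2 + 1 (0x11D), generator 2.
GF_EXP = [0] * 512
GF_LOG = [0] * 256
_value = 1
for _power in range(255):
    GF_EXP[_power] = _value
    GF_LOG[_value] = _power
    _value <<= 1
    if _value & 0x100:
        _value ^= 0x11D
for _power in range(255, 512):
    GF_EXP[_power] = GF_EXP[_power - 255]  # doubled table avoids a modulo in gf_mul


def gf_mul(a: int, b: int) -> int:
    if a == 0 or b == 0:
        return 0
    return GF_EXP[GF_LOG[a] + GF_LOG[b]]


def gf_div(a: int, b: int) -> int:
    if b == 0:
        raise ZeroDivisionError("division by zero in GF(2^8)")
    if a == 0:
        return 0
    return GF_EXP[(GF_LOG[a] - GF_LOG[b]) % 255]


def pq_parity(blocks):
    """P is the XOR of the data blocks; Q is the XOR of g**i * D_i over GF(2^8)."""
    size = len(blocks[0])
    p, q = bytearray(size), bytearray(size)
    for i, block in enumerate(blocks):
        coeff = GF_EXP[i]  # g**i for data disk i
        for j, byte in enumerate(block):
            p[j] ^= byte
            q[j] ^= gf_mul(coeff, byte)
    return bytes(p), bytes(q)


def recover_two(blocks, x: int, y: int, p: bytes, q: bytes):
    """Rebuild lost data blocks x and y (x != y) from the survivors plus P and Q."""
    zero = bytes(len(p))
    survivors = [zero if i in (x, y) else b for i, b in enumerate(blocks)]
    p_rest, q_rest = pq_parity(survivors)
    pxy = bytes(a ^ b for a, b in zip(p, p_rest))  # = Dx xor Dy
    qxy = bytes(a ^ b for a, b in zip(q, q_rest))  # = g**x*Dx xor g**y*Dy
    gx, gy = GF_EXP[x], GF_EXP[y]
    # Solve the 2x2 system: Dx = (qxy xor g**y*pxy) / (g**x xor g**y)
    dx = bytes(gf_div(qv ^ gf_mul(gy, pv), gx ^ gy) for pv, qv in zip(pxy, qxy))
    dy = bytes(a ^ b for a, b in zip(pxy, dx))
    return dx, dy


data = [b"RAID", b"six!", b"P+Q ", b"demo"]  # four data disks, one 4-byte block each
p, q = pq_parity(data)
damaged = [data[0], None, data[2], None]     # data disks 1 and 3 lost
assert recover_two(damaged, 1, 3, p, q) == (data[1], data[3])
```

The extra Galois-field multiplications on every write are one concrete source of the controller overhead and write penalty listed above.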

RAID 7 (Proprietary)

RAID 7: Optimized Asynchrony for High I/O Rates as well as High Data Transfer Rates.

Architectural Features: All I/O transfers are asynchronous, independently controlled and cached, including host interface transfers. All Reads and Writes are centrally cached via the high-speed X-bus. The dedicated parity drive can be on any channel. A fully implemented, process-oriented real-time operating system is resident on the embedded array control microprocessor. Embedded real-time operating system controlled communications channel. Open system uses standard SCSI drives, standard PC buses, motherboards and memory SIMMs. High-speed internal cache data transfer bus (X-bus). Parity generation integrated into cache. Multiple attached drive devices can be declared hot standbys.

Manageability: SNMP agent allows for remote monitoring and management.

Advantages: Overall write performance is 25% to 90% better than single-spindle performance and 1.5 to 6 times better than other array levels. Host interfaces are scalable for connectivity or increased host transfer bandwidth. Small reads in a multi-user environment have a very high cache hit rate, resulting in near-zero access times. Write performance improves with an increase in the number of drives in the array. Access times decrease with each increase in the number of actuators in the array. No extra data transfers required for parity manipulation. RAID 7 is a registered trademark of Storage Computer Corporation.

Disadvantages: One-vendor proprietary solution. Extremely high cost per MB. Very short warranty. Not user-serviceable. Power supply must be backed by a UPS to prevent loss of cache data.

RAID 10

RAID 10: Very High Reliability combined with High Performance

RAID Level 10 requires a minimum of 4 drives to implement.

Advantages: RAID 10 is implemented as a striped array whose segments are RAID 1 arrays. RAID 10 has the same fault tolerance as RAID level 1. RAID 10 has the same overhead for fault tolerance as mirroring alone. High I/O rates are achieved by striping RAID 1 segments. Under certain circumstances, a RAID 10 array can sustain multiple simultaneous drive failures. Excellent solution for sites that would have otherwise gone with RAID 1 but need some additional performance boost.

Disadvantages: Very expensive / high overhead. All drives must move in parallel to the proper track, lowering sustained performance. Very limited scalability at a very high inherent cost.

Recommended Applications: Database servers requiring high performance and fault tolerance.

RAID 10 arrays are typically used in environments that require uncompromising availability coupled with exceptionally high throughput for the delivery of data located in secondary storage. In recent years a number of variants of RAID 10 have been developed with similar capabilities. This paper presents one of the popular alternative implementations and discusses the relative advantages and disadvantages of RAID 10 and this alternative.

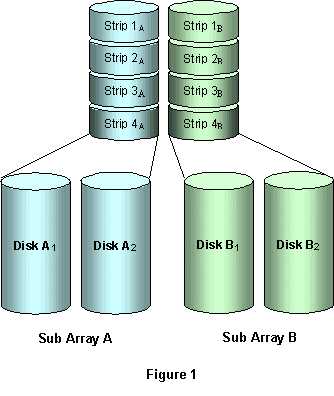

A RAID 10 array is formed using a two-layer hierarchy of RAID types. At the lowest level of the hierarchy is a set of RAID 1 sub-arrays, i.e., mirrored sets. These RAID 1 sub-arrays in turn are striped to form a RAID 0 array at the upper level of the hierarchy. The collective result is a RAID 10 array. Figure 1 shows a RAID 10 composed of two RAID 1 sub-arrays at the lower level of the hierarchy: sub-array A (consisting of disks A1 and A2) and sub-array B (consisting of disks B1 and B2). These two sub-arrays in turn are striped using the strips 1A, 1B, 2A, 2B, 3A, 3B, 4A, 4B to form a RAID 0 at the upper level of the hierarchy. The result is a RAID 10. In the array of Figure 1, each disk participates in exactly one mirrored set, which forces the number of disks in the array to be even.

Let us now look at some of the salient properties of RAID 10. Consider a RAID 10 composed of d disks and N mirrored sets (i.e., constituent RAID 1 sub-arrays). Since each disk in the array participates in exactly one mirrored set, d = 2N.

(a) RAID 10 arrays do not require any parity calculation at any stage of their construction or operation.

(b) RAID 10 arrays are generally deployed in environments that require a high degree of redundancy. The ability to survive multiple failures is a fundamental property of RAID 10. In fact, the maximum number of disk failures a RAID 10 array can withstand is d/2 = N.

What about the number of combinations of failed disks that a RAID 10 array can sustain? The number of ways in which k disks can fail without data loss is $\binom{N}{k} \cdot 2^k$, since there are $\binom{N}{k}$ ways in which to choose k mirror groups from N possible choices, and 2 ways in which to choose a disk within each mirror group. Therefore, by the binomial theorem (omitting the k = 0 term, which corresponds to no failures), the total number of combinations of failed disks that a RAID 10 can support is:

$$\binom{N}{1} 2^1 + \binom{N}{2} 2^2 + \cdots + \binom{N}{N} 2^N = (2 + 1)^N - 1 = 3^N - 1$$

Thus, for a 4-drive RAID 10 containing 2 mirrored sets, the number of combinations in which disks can fail without the array being rendered inoperable is $3^2 - 1 = 8$. In fact, these combinations may be enumerated as follows, with each possible set of failed disks listed within braces: {A1}, {A2}, {B1}, {B2}, {A1, B1}, {A2, B2}, {A1, B2}, and {A2, B1}.
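The count 3^N - 1 and the list above can be reproduced by brute force. The sketch below names disks as in Figure 1 (A1, A2, B1, B2, continuing with C1, C2 and so on for more mirrored sets, an assumed extension of the figure's naming) and keeps every non-empty set of failures that leaves each mirrored set with at least one working disk.

```python
from itertools import combinations


def raid10_survivable_sets(num_mirrors: int) -> list:
    """Enumerate every non-empty set of failed disks that leaves each mirror with a working disk."""
    groups = [chr(ord("A") + g) for g in range(num_mirrors)]
    disks = [f"{g}{i}" for g in groups for i in (1, 2)]
    survivable = []
    for k in range(1, len(disks) + 1):
        for failed in combinations(disks, k):
            # The array survives if no mirror group has lost both of its disks.
            if all(not {f"{g}1", f"{g}2"} <= set(failed) for g in groups):
                survivable.append(set(failed))
    return survivable


print(raid10_survivable_sets(2))
print([len(raid10_survivable_sets(n)) for n in range(1, 6)])  # compare with 3**n - 1
```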

(c) RAID 10 ensures that if a disk in any constituent mirrored set fails, its contents can be extracted from the functioning disk in its mirrored set. Thus, when a RAID 10 array has suffered the maximum number of disk failures it is capable of withstanding, its throughput rate is no worse than that of a RAID 0 with N disks. In fact, any combination of N contiguous independent strips can be read concurrently. The term "independent strip" is used to denote a strip in a collection of strips that is not a mirror of any other strip within that collection.

(d) A RAID 10 array that is in a nominal state can improve the throughput of read operations by allowing concurrent reads to be performed on multiple disks in the array. For example, if the strips 1A, 1B, 2A, 2B are to be read from the array given in Figure 1, it is clear that all four strips can be read concurrently from the disks A1, B1, A2 and B2 respectively.

RAID 1E

RAID 1E: While RAID 10 has traditionally been implemented using an even number of disks, some hybrids can use an odd number of disks as well. Figure 2 illustrates an example of a hybrid RAID 10 array composed of five disks: A, B, C, D and E. In this configuration, each strip is mirrored on an adjacent disk with wrap-around. In fact, this scheme, or a slightly modified version of it, is often referred to as RAID 1E and was originally proposed by IBM. Let us now investigate the properties of this scheme.

When the number of disks comprising a RAID 1E is even, the striping pattern is identical to that of a traditional RAID 10, with each disk being mirrored by exactly one other unique disk. Therefore, all the characteristics of a traditional RAID 10 apply to a RAID 1E when the latter has an even number of disks. However, RAID 1E has some interesting properties when the number of disks is odd.

(a) Just as in the case of traditional RAID 10, RAID 1E does not require any parity calculation either. So in this category, RAID 10 and RAID 1E are equivalent.

(b) The maximum number of disk failures a RAID 1E array using d disks can withstand is $\lfloor d/2 \rfloor$. When d is odd, this yields a value that is equal to that of a traditional RAID 10 while utilizing one additional disk. What about the number of combinations of disk failures that RAID 1E can support? It turns out that RAID 1E is very peculiar in this characteristic when d is odd. Because each strip is mirrored on an adjacent disk with wrap-around, data is lost only when two neighboring disks (counting the last and first disks as neighbors) have both failed. A survivable set of k failed disks is therefore a choice of k disks, no two of them adjacent, around a ring of d disks. Assume for the sake of notational convenience that $\lfloor d/2 \rfloor = p$. The number of such choices for a given k is $\frac{d}{d-k}\binom{d-k}{k}$, so the total number of combinations of failed disks that this scheme can support is:

$$\sum_{k=1}^{p} \frac{d}{d-k}\binom{d-k}{k}$$

Thus, for a 5-drive RAID 1E, the total number of combinations in which disks can fail without the array being rendered inoperable is $5 + 5 = 10$. However, as the value of d increases, the ratio of the number of combinations of disk failures supported by RAID 1E using d disks decreases with respect to conventional RAID 10 using d-1 disks. A 7-disk RAID 1E still supports 28 combinations against $3^3 - 1 = 26$ for a 6-disk RAID 10, but for $d \ge 9$ RAID 1E yields fewer combinations: 75 for 9 disks against $3^4 - 1 = 80$ for an 8-disk RAID 10. For instance, while a conventional RAID 10 using 10 disks can support $3^5 - 1 = 242$ combinations of disk failures, RAID 1E using 11 disks can support only 198. Clearly, RAID 10 is a superior choice when tolerance to a larger number of combinations of disk failures is considered important.

An even more significant implication of this result is the following. Since a RAID 1E with an even number of disks is identical to a traditional RAID 10, a RAID 1E with 10 disks can support more combinations of failures than a RAID 1E with 11 disks. In general, a RAID 1E with 2N disks can support more combinations of failures than a RAID 1E with 2N + 1 disks when $N \ge 4$. In other words, once an array reaches eight or more disks, it is preferable to use an even number of disks for your RAID 1E rather than an odd number if you desire a higher tolerance to disk failures; that is, it is preferable to use a traditional RAID 10!
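Under the adjacent-mirror model described for Figure 2 (each strip on disk i is mirrored on disk i + 1, wrapping from the last disk to the first), the RAID 1E counts can be checked by brute force: a failure set is survivable exactly when no two ring-adjacent disks have failed. The sketch assumes that model and simultaneous failures with no rebuild in between, and compares against the RAID 10 closed form for one disk fewer.

```python
from itertools import combinations


def raid1e_survivable_count(d: int) -> int:
    """Count non-empty failure sets with no two ring-adjacent disks (disk i mirrors onto i + 1)."""
    count = 0
    for k in range(1, d + 1):
        for failed in combinations(range(d), k):
            lost = set(failed)
            if all((i + 1) % d not in lost for i in lost):
                count += 1
    return count


def raid10_survivable_count(num_mirrors: int) -> int:
    """Closed form 3**N - 1 for a traditional RAID 10 with N mirrored pairs."""
    return 3 ** num_mirrors - 1


for d in (5, 7, 9, 11):
    print(f"RAID 1E, {d} disks: {raid1e_survivable_count(d)}; "
          f"RAID 10, {d - 1} disks: {raid10_survivable_count((d - 1) // 2)}")
```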

(c) When a RAID 1E array suffers the maximum number of disk failures it is capable of withstanding, i.e., $\lfloor d/2 \rfloor$, the number of contiguous independent strips that can be accessed concurrently can be less than the number of disks that remain operational. For example, consider the RAID 1E array displayed in Figure 2. Assume that disks A and C have failed. In this scenario, it is clear that the contiguous strips 4, 5 and 6 cannot be read concurrently although three disks remain operational. Thus the throughput of a RAID 1E with d disks, where d is odd, may be no higher under specific access patterns than that of a RAID 10 with d-1 disks when both arrays experience the maximum number of sustainable disk failures.

(d) Just as in the case of a traditional RAID 10 implementation, RAID 1E in a nominal state can improve the throughput of read operations by allowing concurrent reads to be performed on multiple disks in the array. The fact that there are more disks than there are mirror sets should intuitively suggest as much.

Conclusion: RAID 1E offers a little more flexibility in choosing the number of disks that can be used to constitute an array. The number can be even or odd. However, in larger arrays RAID 10 is far more robust in terms of the number of combinations of disk failures it can sustain, even when using fewer disks. Furthermore, a RAID 10 guarantees a throughput rate that is always equal to that which is obtainable from the concurrent use of all its functioning disks. In contrast, specific access patterns may not lend themselves to the concurrent use of all functioning disks under RAID 1E. Therefore, if extremely high availability and throughput are of paramount importance to your applications, RAID 10 should be the configuration of choice.

RAID 50 (same as RAID 05)

A RAID 50 array is formed using a two-layer hierarchy of RAID types. At the lowest level of the hierarchy is a set of RAID 5 arrays. These RAID 5 arrays in turn are striped to form a RAID 0 array at the upper level of the hierarchy. The collective result is a RAID 50 array. The figure below demonstrates a RAID 50 composed of two RAID 5 arrays at the lower level of the hierarchy: arrays X and Y. These two arrays in turn are striped using 4 stripes (comprised of the strips 1X, 1Y, 2X, 2Y, etc.) to form a RAID 0 at the upper level of the hierarchy. The result is a RAID 50.

Advantage: RAID 50 ensures that if one of the disks in any parity group fails, its contents can be extracted using the information on the remaining functioning disks in its parity group. Thus it offers better data redundancy than the simple RAID types, i.e., RAID 1, 3, and 5. Also, a RAID 50 array can improve the throughput of read operations by allowing reads to be performed concurrently on multiple disks in the set.
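A short sketch of the two-layer behavior, assuming each RAID 5 group is an independent parity group of three or more disks and that failures are simultaneous: the array survives as long as no group has lost more than one disk, and each group gives up one disk's worth of capacity to parity. The disk names X1 to Y3 are hypothetical, since the group sizes of the figure are not stated.

```python
def raid50_usable_disks(groups: int, disks_per_group: int) -> int:
    """Each RAID 5 group gives up one disk's worth of capacity to parity."""
    if disks_per_group < 3:
        raise ValueError("each RAID 5 group needs at least 3 disks")
    return groups * (disks_per_group - 1)


def raid50_survives(failed: set, groups) -> bool:
    """The RAID 0 layer survives only if every RAID 5 group has lost at most one disk."""
    return all(len(set(group) & failed) <= 1 for group in groups)


groups = [("X1", "X2", "X3"), ("Y1", "Y2", "Y3")]   # hypothetical 3-disk groups X and Y
print(raid50_usable_disks(len(groups), 3))           # 4 disks' worth of capacity
print(raid50_survives({"X1", "Y2"}, groups))         # one failure per group: survives
print(raid50_survives({"X1", "X2"}, groups))         # two failures in group X: data lost
```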

RAID 53

RAID 53: High I/O Rates and Data Transfer Performance

RAID Level 53 requires a minimum of 5 drives to implement.

Advantages: RAID 53 should really be called "RAID 03" because it is implemented as a striped (RAID level 0) array whose segments are RAID 3 arrays. RAID 53 has the same fault tolerance as RAID 3, as well as the same fault tolerance overhead. High data transfer rates are achieved thanks to its RAID 3 array segments. High I/O rates for small requests are achieved thanks to its RAID 0 striping. May be a good solution for sites that would have otherwise gone with RAID 3 but need some additional performance boost.

Disadvantages: Very expensive to implement. All disk spindles must be synchronized, which limits the choice of drives. Byte striping results in poor utilization of formatted capacity.
